3D Reconstruction (NeRF, Gaussian Splatting) - August 29, 2023

What is it? Recent approaches to 3D capture and reconstruction offer new ways to capture, edit and share spatial data. This page shows a few outputs from experiments I’ve been running with Neural Radiance Field (NeRF) rendering and 3D Gaussian Splatting. Most of these captures were made from 50-100 photos taken with a cell phone camera and processed on NYU’s High Performance Computing Cluster using open-source tools that implement these approaches.

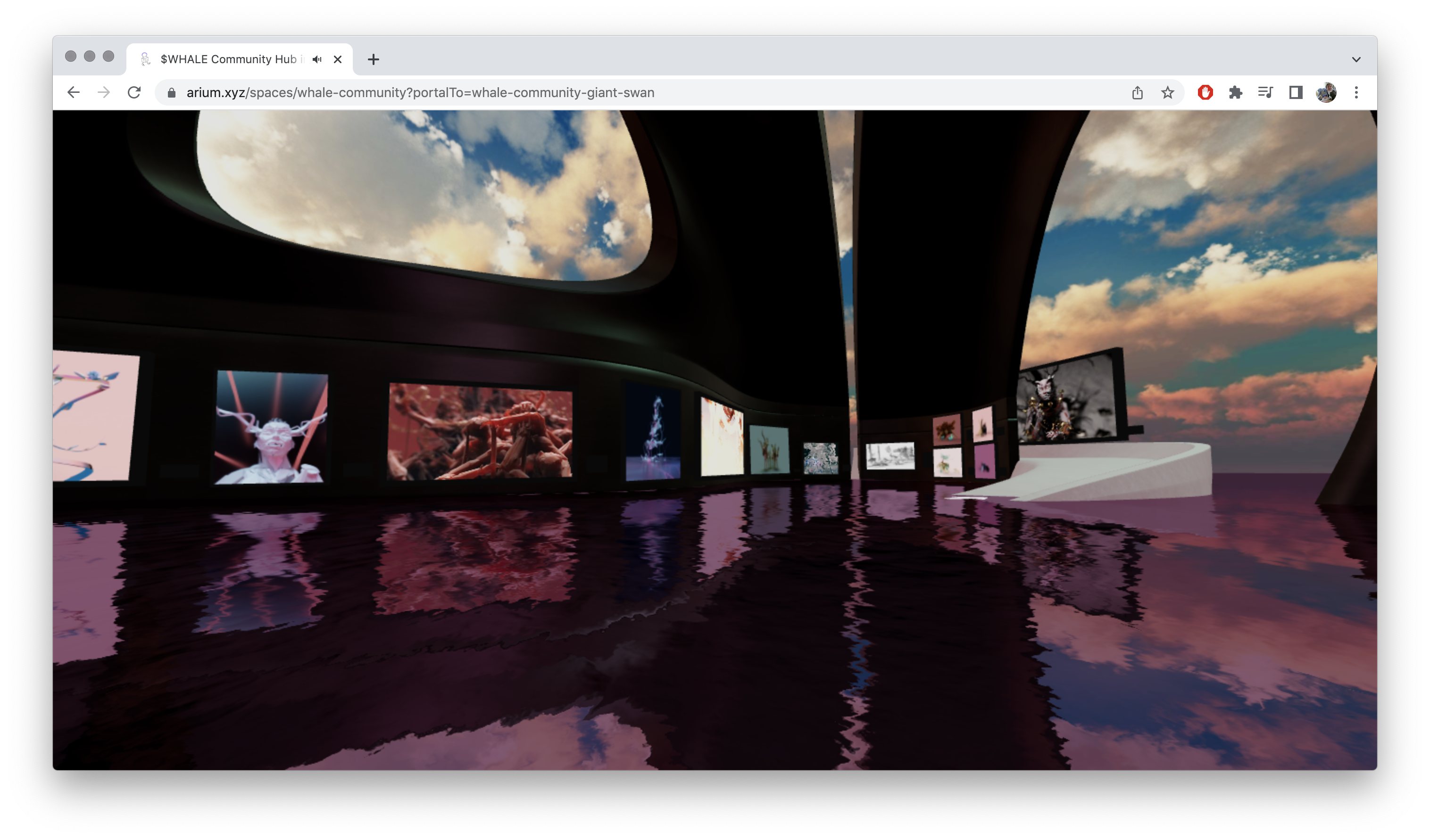
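Both NeRF and 3D Gaussian Splatting produce a pixel's color by blending many semi-transparent samples along a camera ray, front to back. The sketch below shows that compositing step in NeRF's form, where each sample's opacity comes from a volume density `sigma` and the spacing `delta` between samples. Gaussian Splatting uses the same transmittance product but takes each depth-sorted Gaussian's alpha from its learned opacity and projected 2D footprint instead. The function name and inputs are illustrative only and are not taken from the tools used for these captures.

```python
import math


def composite_ray(sigmas, colors, deltas):
    """Front-to-back alpha compositing of samples along one camera ray.

    sigmas: volume density at each sample (NeRF-style)
    colors: (r, g, b) tuple at each sample
    deltas: distance from each sample to the next
    Returns the accumulated (r, g, b) color and the per-sample weights.
    """
    transmittance = 1.0  # fraction of light that still reaches the current sample
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha  # contribution of this sample to the pixel
        weights.append(weight)
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # light blocked by this sample is gone
    return tuple(rgb), weights
```

A dense sample near the camera dominates the result, and the weights always sum to at most one; whatever is left over is the light that passes through the whole ray (often filled in with a background color).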

Arium - September 22, 2022

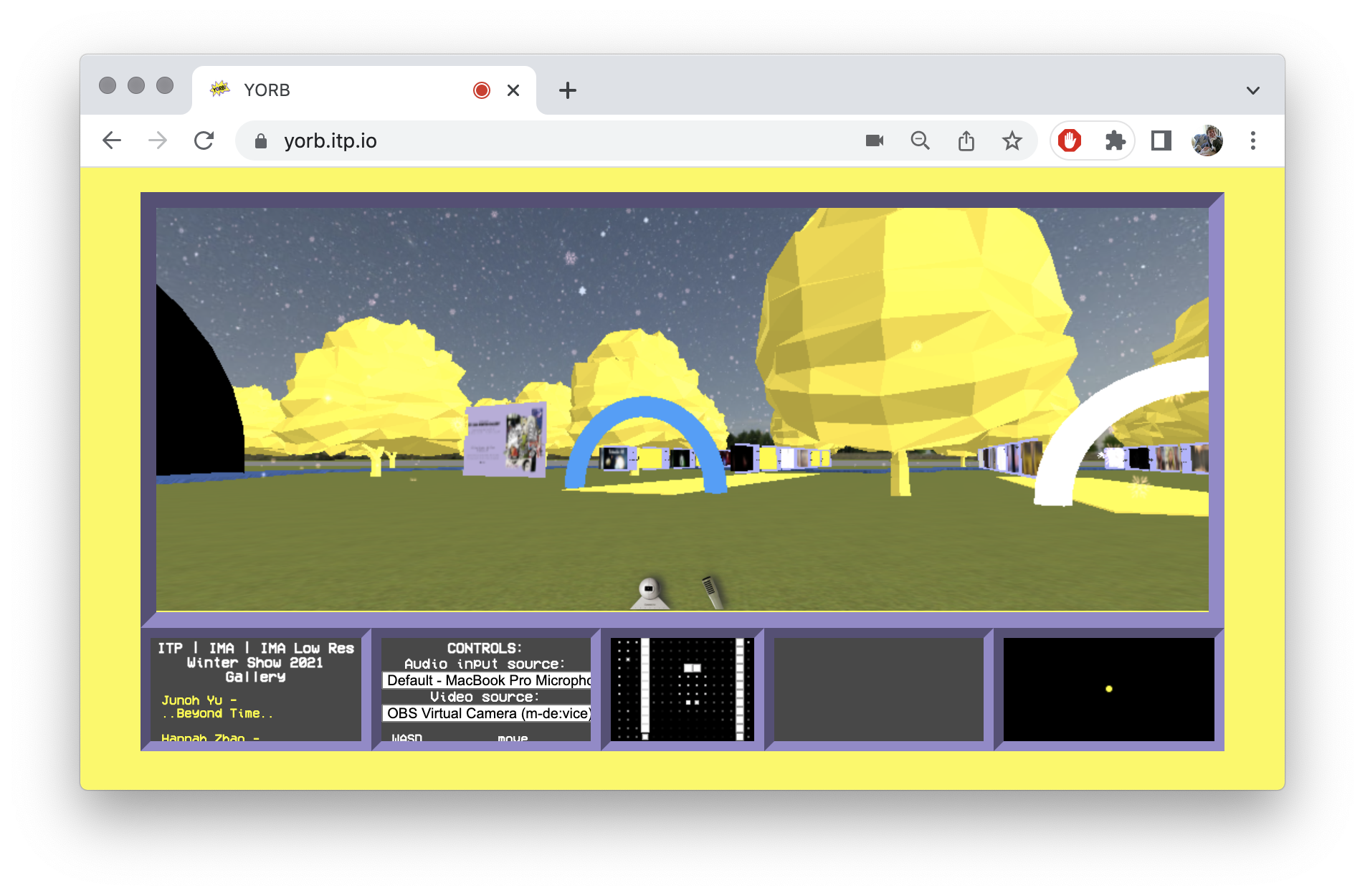

YORB 2020 - June 26, 2020

YORB 2020 is a web application that lets people talk with one another over audio and video while navigating a 3D-rendered environment. I started developing this project for the students of New York University’s Interactive Telecommunications Program to provide an alternative to the many digital communication platforms they might otherwise use daily: Discord, Facebook, Instagram, Slack, Zoom, SMS and messaging apps, and email, to name just a few.

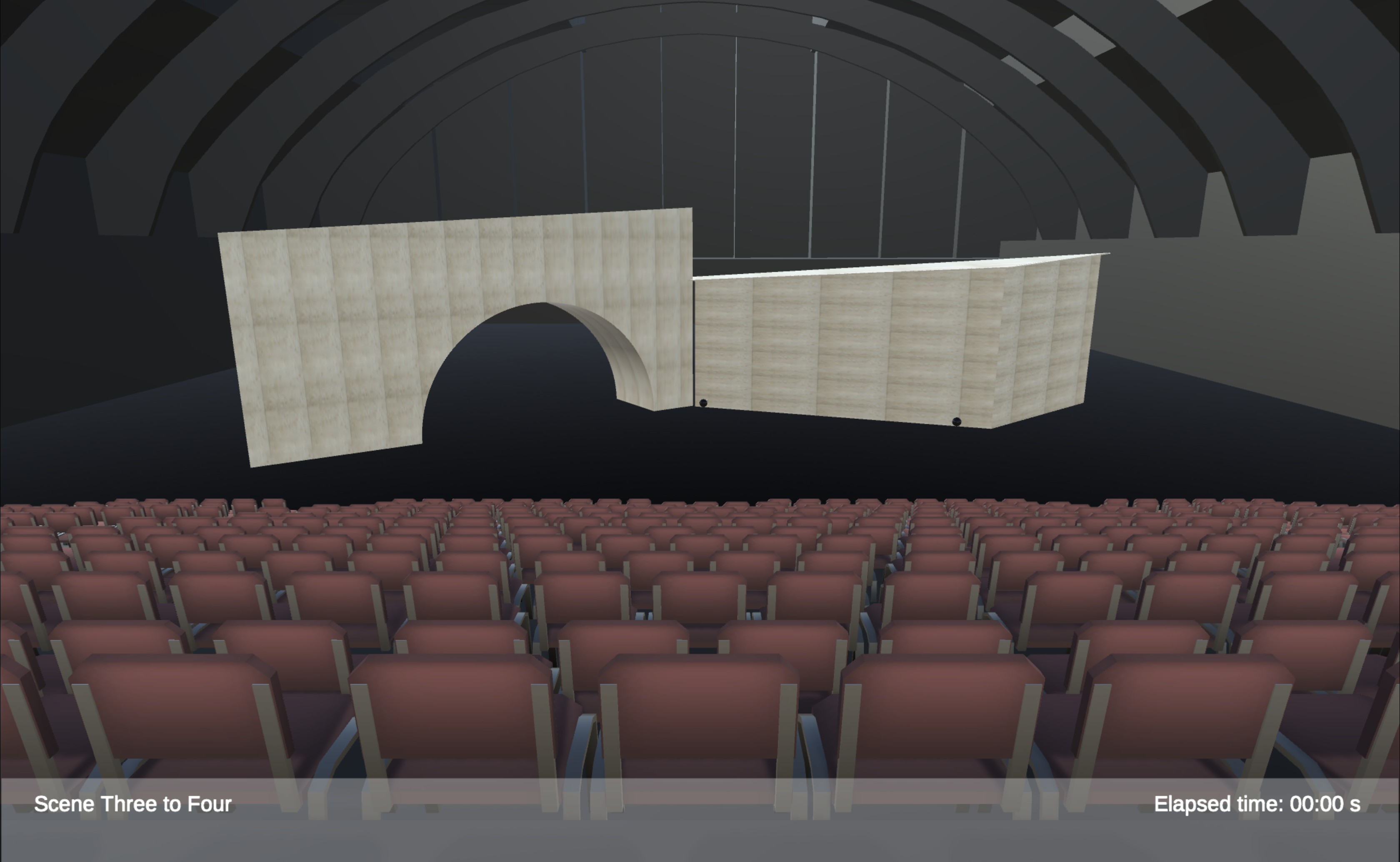

Previsualization Tool: Judgment Day - December 01, 2019

Image from NYTimes

Faced with planning a series of complicated scenic transitions involving set pieces the size and weight of a city bus, I worked with the production team at the Park Avenue Armory to develop a previsualization tool. This tool allowed the production’s choreographer, composer, director and set designer to align their expectations and desires for key transition moments in the play. Once these moments were set, the tool helped train the six stagehands who operated heavy-lift electric tuggers to move the set pieces safely and in keeping with the creative team’s choreographic intentions.

Eye in the Sky - November 27, 2019

Eye in the Sky is an interactive tabletop simulation in which audience members can engage with situations from the AMC Network series The Walking Dead and Fear the Walking Dead. This project was presented at NYC Media Lab Summit 2019 as part of a grant from AMC Networks exploring Synthetic Media & The Future of Storytelling. Using real-world location data, agent-based modelling, and a computer vision framework, this simulation explores how emerging interactive media tools can allow audiences to develop and follow their own storylines within The Walking Dead universe.

Motion Platform - November 19, 2019

Brooklyn Research Fellowship

During a fellowship at Brooklyn Research, I came across a dusty steel platform, replete with motors, linkages, multipin connectors and a power supply large enough to pique my interest. It turned out to be a three-degree-of-freedom (3-DOF) motion platform which, lacking both a hardware and a software interface, was sitting entirely unused. This video is a brief overview of the work I did (with Brooklyn Research’s support) to resurrect the motion platform and provide an extensible, reusable software library for its ongoing use.
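A core job of any software library for a platform like this is inverse kinematics: turning a desired pose into a command for each actuator. The page doesn't describe the platform's geometry, so the sketch below assumes the three degrees of freedom are heave, pitch and roll (a common arrangement, but an assumption here) and that three vertical actuators sit at hypothetical mount points. It returns how far each mount point must rise or fall; a real platform driven through cranks and linkages would need one more step to convert those heights into motor angles.

```python
import math

# Hypothetical mount points (metres) of three vertical actuators, measured in the
# platform frame from its centre of rotation: x forward, y left, z up.
ACTUATOR_MOUNTS = [
    (0.5, 0.0),     # front
    (-0.25, 0.43),  # rear left
    (-0.25, -0.43), # rear right
]


def actuator_heights(heave, pitch, roll, mounts=ACTUATOR_MOUNTS):
    """Vertical displacement needed at each mount for a heave/pitch/roll pose.

    Roll is applied about the x axis, then pitch about the y axis (radians).
    Only the z component of each rotated mount point is returned, which is what
    a vertical actuator must supply; horizontal drift of the mounts is ignored.
    """
    heights = []
    for x, y in mounts:
        # z component of R_y(pitch) @ R_x(roll) @ (x, y, 0)
        z = -x * math.sin(pitch) + y * math.sin(roll) * math.cos(pitch)
        heights.append(heave + z)
    return heights
```

With this sign convention a positive pitch lowers the front actuator and raises both rear ones equally, while a positive roll raises the left side and lowers the right.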

Workflow: Unity to TensorFlow.js - November 17, 2019

Reinforcement learning is exciting. It is also quite difficult. Knowing both of these things to be true, I wanted to find a way to use Unity’s ML-Agents reinforcement learning framework to train neural networks for use on the web with TensorFlow.js (TFJS). Why do this? Specifically, why use Unity ML-Agents rather than training the models in TFJS directly? After all, TFJS currently has at least two separate examples of reinforcement learning, each capable of training in the browser with TFJS directly (or, somewhat more practically, training with TensorFlow.js’s Node.

Refracting Rays - April 22, 2019

How light interacts with surfaces and lenses, and how we perceive it, is fundamental to how visual art is created and experienced. Despite this importance, education around basic optical principles tends to take a science-first approach that may not resonate with an artistic community. This installation attempts to bridge that gap by encouraging audience members to engage holistically with optics and the phenomenon of refraction. It consists of a series of engagements with playful and impractical lenses.
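The refraction these lenses play with follows Snell's law, $n_1 \sin\theta_1 = n_2 \sin\theta_2$, where $n_1$ and $n_2$ are the refractive indices on either side of a surface and the angles are measured from the surface normal. The short function below is an illustrative sketch of that relationship (its name is hypothetical, not part of the installation); it also shows total internal reflection, which happens when light leaving a denser medium hits the surface too obliquely to escape.

```python
import math


def refraction_angle(theta_in_deg, n1, n2):
    """Refracted ray angle in degrees from Snell's law: n1 sin(t1) = n2 sin(t2).

    Angles are measured from the surface normal. Returns None when the ray
    undergoes total internal reflection and no refracted ray exists.
    """
    s = n1 / n2 * math.sin(math.radians(theta_in_deg))
    if abs(s) > 1.0:
        return None  # total internal reflection
    return math.degrees(math.asin(s))
```

For example, light passing from air (n ≈ 1.0) into common glass (n ≈ 1.5) at 30° bends toward the normal to about 19.5°, while light inside that glass striking the surface at 60° cannot leave at all.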

Library of Places - April 17, 2019

I made this prototype as part of Jer Thorp’s “Artists in the Archive” course, in which we developed engagements with the US Library of Congress’ open digital resources. This new search ‘vehicle’ for the Library of Congress’ unfathomably massive photo archive uses the analogy of a road trip to search these collections: as you plot a route on the map, photos from the archive taken along that route are displayed.
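One simple way to decide which photos lie "along a route" is a distance test against the route's points. The sketch below assumes each photo record has already been reduced to a latitude/longitude pair and that the route is a densely sampled list of points (for example from a routing service); the `lat`/`lon` field names and the 5 km radius are hypothetical and are not taken from the Library of Congress API or from the prototype itself.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def photos_along_route(route, photos, radius_km=5.0):
    """Return the photos within radius_km of any point on the route.

    route:  list of (lat, lon) points, assumed densely sampled (hypothetical input)
    photos: list of dicts with hypothetical "lat" and "lon" keys
    """
    return [
        p for p in photos
        if any(haversine_km(p["lat"], p["lon"], lat, lon) <= radius_km for lat, lon in route)
    ]
```

Because only the route's points are checked, a sparsely sampled route could miss photos near the middle of a long segment; densifying the route or measuring distance to each segment avoids that.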

p5.js WebGL Improvements - April 16, 2019

The new debugMode() and orbitControl() features give a clear sense of 3D space.

I participated in Google Summer of Code in 2018, contributing to the WebGL (3D graphics) implementation of the open-source library p5.js. p5.js is an open-source JavaScript library for creative coding, started by the Processing Foundation with the express goal of ‘making coding accessible for artists, designers, educators, and beginners.’ With this in mind, I proposed and made several changes to the Camera API and added several debugging features to ease the beginner programmer’s transition from working in 2D to 3D.
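orbitControl() lets the user drag the mouse to orbit the camera around a scene. A common way to build that kind of control is to keep the camera on a sphere around a target point and let mouse movement change two angles. The sketch below shows only that geometry, as an illustration; it is not p5.js's actual implementation, and the function name is hypothetical. It uses a y-up convention, whereas p5.js's WEBGL mode has +y pointing down, so a real sketch would flip the sign of the vertical term before passing the result to p5's `camera()`.

```python
import math


def orbit_camera_eye(center, radius, azimuth, elevation):
    """Eye position for a camera orbiting `center` at a fixed `radius` (y-up).

    azimuth rotates around the vertical axis and elevation tilts toward it;
    both are in radians. Mouse drags would update these two angles.
    """
    cx, cy, cz = center
    # Clamp elevation just short of the poles so the camera's up vector never flips.
    limit = math.pi / 2 - 1e-3
    elevation = max(-limit, min(limit, elevation))
    x = cx + radius * math.cos(elevation) * math.sin(azimuth)
    y = cy + radius * math.sin(elevation)
    z = cz + radius * math.cos(elevation) * math.cos(azimuth)
    return (x, y, z)
```

With both angles at zero the eye sits on the +z axis looking back at the target, and whatever angles the user drags to, the camera stays exactly `radius` away from the target.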